The term "data lake" was coined by James Dixon, then CTO of Pentaho, in 2010. Dixon used the metaphor of a lake to contrast with the more structured "data mart" (which he compared to a store-bought bottled water). In his original blog post, he described a data lake as a large body of water in its natural state, with water flowing in from various sources. The idea was that data could be stored in its raw, unprocessed form, waiting to be used.

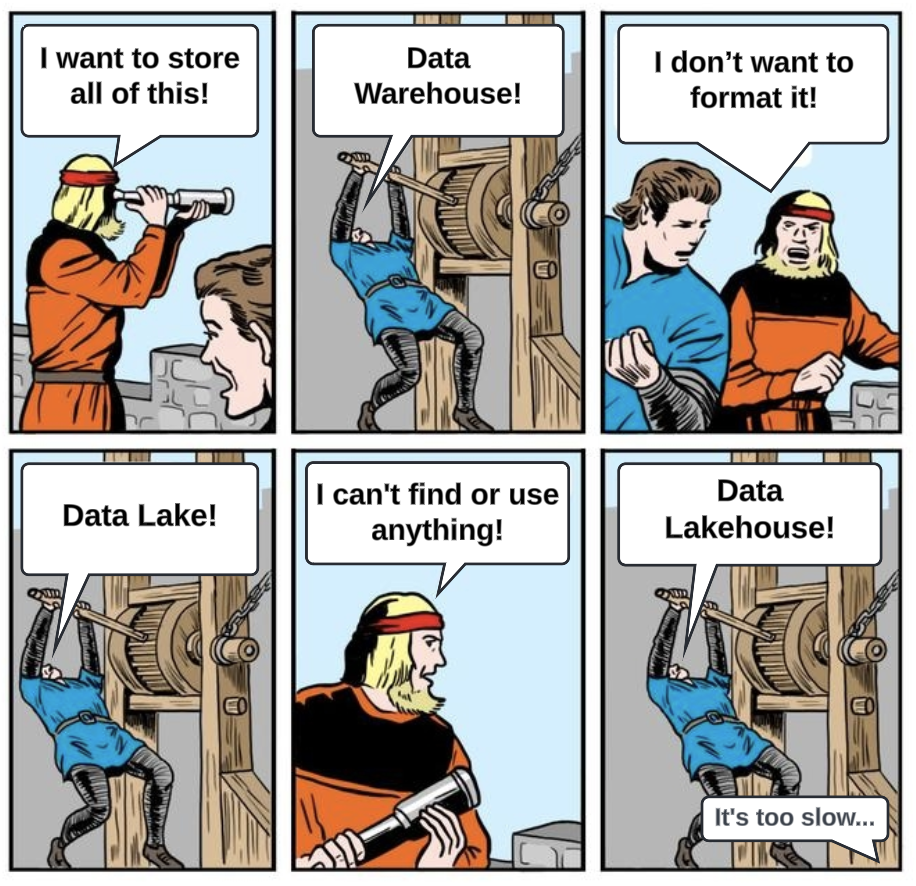

However, the reality turned out quite differently from this initial vision. Today, when people talk about data lakes, they often describe something more complex and nuanced.

Currently, most modern data lakes are actually more like "lake houses" - a hybrid between traditional data warehouses and the original data lake concept. They incorporate more structure, governance, and processing than originally envisioned, while still maintaining some of the flexibility of raw data storage.

The concept has evolved from Dixon's original vision of a pure, natural body of data to something more engineered and managed. We're seeing a departure from the original concept that represents a necessary maturation based on real-world experience and needs. The most successful implementations now combine the flexibility of data lakes with the structure and governance of traditional data warehouses, recognizing that both aspects are necessary for effective enterprise data management.

Interestingly, this evolution mirrors a broader pattern in data management: initial excitement about a new, more flexible approach, followed by the recognition that some level of structure and governance is necessary for practical use. The same pattern occurred with NoSQL databases, which have largely evolved into "NewSQL" systems that incorporate more traditional database features.

The key to success is finding a balance between flexibility and structure, ensuring that innovation doesn’t come at the cost of usability, security, or efficiency. This is why the next generation of data platforms must learn from past mistakes while offering a fundamentally improved approach.

While Matterbeam is not a data lake, it solves many of the problems that data lakes were originally meant to address—but in a more efficient, scalable way. Matterbeam is first and foremost optimized for data movement and transformation, but Matterbeam has a free storage layer that consists of immutable logs - we store the data, metadata, and the history of all the changes to the data. The adaptive data transformation not only detects schemas automatically but also adapts and converts data into the required formats for downstream destinations. This enables Matterbeam to capture data in its raw form but without prematurely locking it into a specific structure. Data remains flexible, waiting to be transformed and moved only when needed, rather than forcing upfront processing decisions.

Unlike traditional data lakes, which often become unmanageable data swamps, Matterbeam ensures that raw data remains discoverable, secure, and useful through its built-in observability and transformation capabilities. It also can collect and emit data to any data lake of your choice without the need for third party tools. In essence, Matterbeam provides the adaptability of a data lake while sidestepping its biggest pitfalls, offering a more intelligent foundation for modern data infrastructure.

If you're interested in testing or deploying Matterbeam for a particular data challenge we'd love to talk.