Generative AI has made remarkable progress over the past few years. We've been promised AI agents that will revolutionize everything from customer service to software development, and now they're coming for your data infrastructure. The pitch is seductive: AI agents will automatically handle data integration, quality, and governance. They'll learn your systems, understand your use cases, and seamlessly connect everything together. There's just one problem: it's fantasy.

Let's be honest: who's actually happy with their data infrastructure? Look around. The same complaints we heard a decade ago still echo through boardrooms and engineering meetings today. Data silos. Broken pipelines. Quality issues. Integration headaches. The tools have gotten shinier (and more expensive), but the fundamental problems persist.

If humans, with all their context and understanding, consistently fail at effectively connecting data systems, why do we think AI will do any better?

AI agents don't exist in a vacuum. They learn from examples: our examples. And what examples do we have? Decades of brittle, broken systems. Pipelines that crack under pressure. Integration patterns that fall apart at scale. We're essentially asking AI to learn best practices from a history of worst practices.

Where will these AI agents learn how to properly connect systems? From our current landscape of duct-taped solutions and prayer-based architectures? The same architectures that break down as soon as requirements shift or scale increases?

The industry's response to data complexity has always been more automation. Smarter ETL tools. Automatic data catalogs. Integration platforms. Each promised to solve our problems by automating away the complexity. Each pushed the breaking point a little further out. But they all eventually hit the same wall: fundamental limits in how we think about data flow.

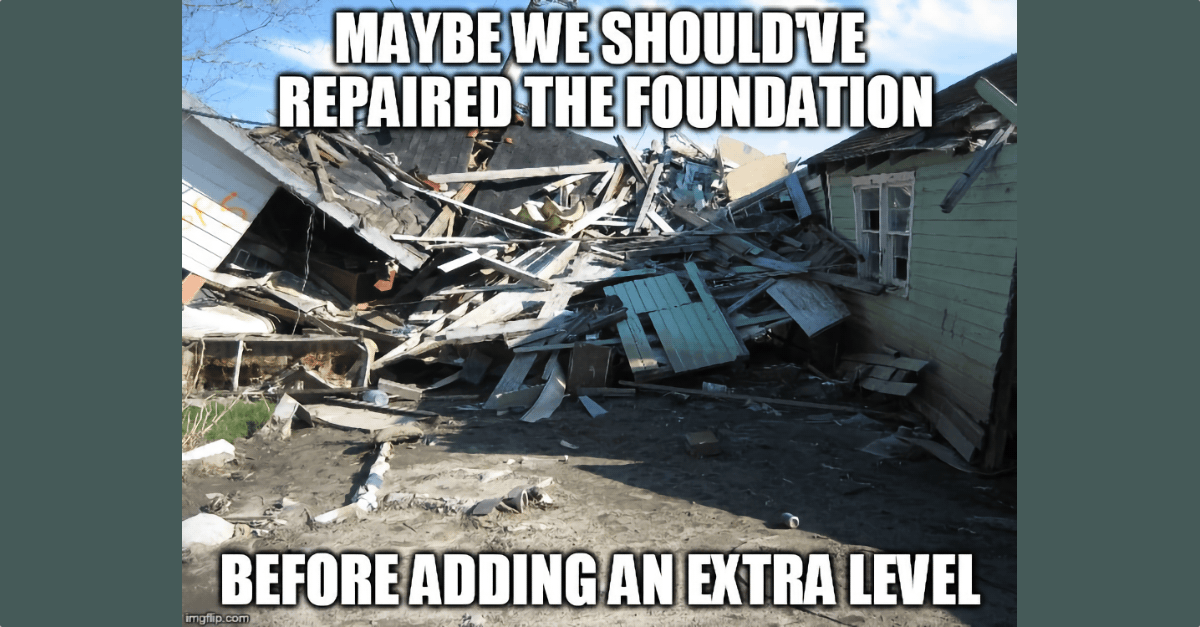

AI agents are just the next step in this automation arms race. Yes, they might create even more hyper-automated pipelines. They might generate more code, faster. But they're still building on the same flawed foundation. It's like strapping a rocket engine to a horse and buggy: you'll go faster, but how long until the wheels come off?

Data quality is already a nightmare. Human data engineers, with years of domain expertise, regularly introduce subtle issues that take months to discover. Mismatched expectations between data producers and data consumers cause constant breakage. Now imagine AI agents, trained on this messy status quo, making assumptions about data distributions, formats, and relationships. It's a recipe for disaster.

More worrisome, many of these failures won't be obvious. They'll be subtle, insidious issues that creep into your data over time. By the time you notice, the damage will be done, and good luck tracking down where it started in a black-box AI system.

Here's something nobody's talking about: reproducibility. Data infrastructure isn't just about moving data; it's about being able to trace, audit, and replay every transformation. Most AI agents, especially those built on large language models, are inherently non-deterministic: the same input might produce slightly different outputs each time. Work is being done to improve this, but the underlying generation process is still probabilistic.

In data infrastructure, that's a big problem. How do you debug a pipeline when you can't reproduce the exact behavior that caused the problem? How do you audit transformations when the process itself is probabilistic?
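To make the reproducibility problem concrete, here is a minimal Python sketch, assuming a toy pipeline whose input is an immutable tuple of records. All names (`SOURCE`, `normalize`, `sampled_normalize`, `fingerprint`, `replay`, `replay_sampled`) are hypothetical. A deterministic transform can be re-run and its output hash compared run to run, which is what auditing relies on; the sampled step, a rough stand-in for a model-driven transform, gives no such guarantee unless its randomness is pinned.

```python
import hashlib
import json
import random
from decimal import Decimal

# Hypothetical immutable input: a tuple of records that is never modified in place.
SOURCE = (
    {"id": 1, "amount": "12.50", "currency": "usd"},
    {"id": 2, "amount": "7.00", "currency": "EUR"},
)


def normalize(record):
    """Deterministic transform: the same record always produces the same output."""
    return {
        "id": record["id"],
        "amount_cents": int(Decimal(record["amount"]) * 100),
        "currency": record["currency"].upper(),
    }


def sampled_normalize(record, rng):
    """Toy stand-in for a sampled (LLM-style) step: it sometimes guesses the currency."""
    out = normalize(record)
    if rng.random() < 0.5:
        out["currency"] = rng.choice(["USD", "EUR", "GBP"])
    return out


def fingerprint(records):
    """Hash a run's output so two runs can be compared exactly."""
    payload = json.dumps(list(records), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def replay():
    """Re-run the deterministic pipeline over the same immutable input."""
    return fingerprint(normalize(r) for r in SOURCE)


def replay_sampled(seed=None):
    """Re-run the sampled pipeline; seed=None draws fresh OS entropy each time."""
    rng = random.Random(seed)
    return fingerprint(sampled_normalize(r, rng) for r in SOURCE)


if __name__ == "__main__":
    print(replay() == replay())                  # True: this run can be replayed and audited
    print(replay_sampled() == replay_sampled())  # may print True or False between runs
```

In this toy, fixing the seed (`replay_sampled(seed=7)`) makes the sampled step repeatable again; whether a given model-serving stack offers an equivalent guarantee in practice is a separate question.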

Companies like Databricks are pushing the lakehouse as the foundation for AI-powered data platforms (naturally). But this just highlights the industry's stubborn attachment to one-size-fits-all thinking. Yes, AI might help manage your lakehouse better. It might generate prettier dashboards. But what about the countless use cases where a lakehouse architecture isn't the right fit?

The hard truth is that there are no shortcuts to good data infrastructure. AI agents might automate some tasks, but they won't solve the fundamental problems that have plagued our industry for decades. Not because they're not powerful enough, but because they're learning from and building upon our own flawed approaches.

What we need isn't more automation of broken patterns; it's a fundamental rethink of how data flows through our systems. Until we fix the foundation, all we're doing is building fancier ways to fail.

There's an irony here: by extending the existing flawed models, we're actually limiting AI's potential. The real breakthrough will come when we build the right foundation first, one that treats data as dynamic and flowing rather than static and siloed.

This is where Matterbeam's approach changes everything. By focusing on immutable, transformable, and replicable data flows, Matterbeam creates a predictable, mechanical foundation that AI can actually build upon. Instead of trying to patch together brittle pipelines, AI agents could work with clear, traceable data flows. Instead of making blind assumptions about data quality, they could understand the precise lineage and transformations of every piece of data.
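As a generic illustration of what "precise lineage" can mean in practice, here is a minimal Python sketch of an append-only log in which every derived record carries the offset it came from and the name of the transform that produced it. All names (`Log`, `Event`, `Derived`, `derive`, `upper_country`) are hypothetical; this is not Matterbeam's API or implementation, just one simple way to make lineage explicit.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator, List, Tuple

Payload = Tuple[Tuple[str, str], ...]  # sorted key/value pairs, immutable


@dataclass(frozen=True)
class Event:
    """One immutable entry in an append-only log."""
    offset: int
    payload: Payload


@dataclass(frozen=True)
class Derived:
    """A transformed record that remembers exactly where it came from."""
    payload: Payload
    source_offset: int
    transform: str


class Log:
    """Append-only: entries can be added and read, never edited or removed."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, **fields: str) -> int:
        offset = len(self._events)
        self._events.append(Event(offset, tuple(sorted(fields.items()))))
        return offset

    def __getitem__(self, offset: int) -> Event:
        return self._events[offset]

    def __iter__(self) -> Iterator[Event]:
        return iter(self._events)


def derive(log: Log, name: str,
           fn: Callable[[Dict[str, str]], Dict[str, str]]) -> List[Derived]:
    """Apply a named transform to every event, stamping each output with its lineage."""
    return [
        Derived(tuple(sorted(fn(dict(e.payload)).items())), e.offset, name)
        for e in log
    ]


def upper_country(p: Dict[str, str]) -> Dict[str, str]:
    return {**p, "country": p["country"].upper()}


if __name__ == "__main__":
    log = Log()
    log.append(user="ana", country="pt")
    log.append(user="bo", country="se")
    out = derive(log, "upper_country", upper_country)
    # Trace a derived record back to its exact source event.
    print(out[1].transform, log[out[1].source_offset].payload)
```

Because events are frozen and never rewritten, any derived record can be traced back to the exact input and transform that produced it, which is the property the paragraph above argues AI agents need to work with.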

Think about what this means: AI agents wouldn't be wasting their potential trying to navigate broken architectures. They could focus on what they do best: finding patterns, optimizing flows, and generating insights. With a solid foundation like Matterbeam, AI becomes a powerful accelerator rather than a band-aid on a broken system.

The future isn't about AI magically fixing our data problems. It's about building the right foundation first, then leveraging AI to take us further than we could go alone. Only then will we unlock the true potential of both our data and our AI systems.