There's a stat that gets thrown around a lot, in one version or another: data teams spend 80% of their time on data preparation and cleaning. Eighty percent.

We've just... accepted this? Like it's some law of nature? As if the universe decreed that for every hour of actual utility, you must first sacrifice four hours wrestling with data quality issues?

Here's what I think. Your data isn't actually dirty.

You know what happens every Monday morning at most companies? Janice runs a "data cleaning" job. Deduplicating customer records. Standardizing addresses. Normalizing those product names. Filling in missing values with carefully calculated averages.

And by Monday afternoon? Jenn in marketing is asking why their campaign targeting is off. Because it turns out that customer with two addresses... they actually DO have two addresses. One for billing, one for shipping. Your deduplication murdered a legitimate business reality.
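Here's Janice's deduplication problem in miniature. This is a minimal Python sketch. The field names (`customer_id`, `address_type`, `address`) and both helper functions are hypothetical, not taken from any real pipeline. Keying on customer ID alone collapses the billing and shipping rows into one. Removing only rows that are identical in every field keeps both.

```python
# Hypothetical customer rows: one customer, two legitimate addresses.
rows = [
    {"customer_id": 42, "address_type": "billing", "address": "1 Main St"},
    {"customer_id": 42, "address_type": "shipping", "address": "9 Dock Rd"},
]


def naive_dedup(rows):
    """Keep the first row per customer_id, treating any repeat as a 'duplicate'."""
    seen = {}
    for row in rows:
        seen.setdefault(row["customer_id"], row)
    return list(seen.values())


def dedup_exact(rows):
    """Drop only rows that are identical in every field."""
    unique = []
    for row in rows:
        if row not in unique:
            unique.append(row)
    return unique


print(len(naive_dedup(rows)))  # 1: the shipping address is gone
print(len(dedup_exact(rows)))  # 2: both addresses survive
```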

Bill in finance is wondering why the quarterly numbers look weird. Because those "missing" values that were helpfully filled in? They weren't missing. They were unknown. There's a difference. Unknown is information. It tells you something didn't happen, or couldn't be measured, or hasn't been determined yet. But now it's gone, replaced with a pleasant lie of statistical averaging.
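Bill's problem looks like this in a few lines. This is a minimal Python sketch. It assumes a hypothetical dict of regional figures where `None` marks a value that hasn't been determined yet. Mean imputation turns that unknown into a confident-looking 100.0. The alternative reports the known total and names what's still missing.

```python
from statistics import mean

# Hypothetical quarterly figures; None means "not determined yet", not zero.
revenue = {"north": 120.0, "south": 80.0, "west": None}


def impute_mean(values):
    """The usual 'cleaning' step: replace unknowns with the mean of known values."""
    avg = mean(v for v in values.values() if v is not None)
    return {k: (avg if v is None else v) for k, v in values.items()}


def known_total(values):
    """Sum what is known and list what isn't, instead of guessing."""
    total = sum(v for v in values.values() if v is not None)
    unknown = sorted(k for k, v in values.items() if v is None)
    return total, unknown


print(impute_mean(revenue))  # {'north': 120.0, 'south': 80.0, 'west': 100.0}
print(known_total(revenue))  # (200.0, ['west'])
```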

This is what we do in the name of "clean" data.

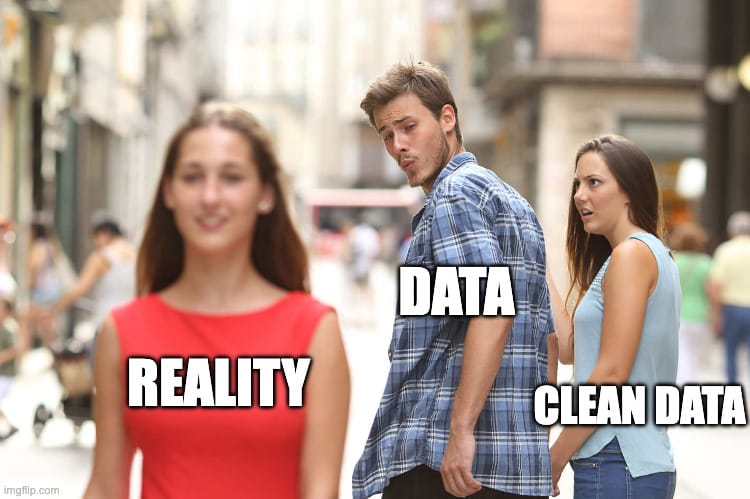

We take reality... messy, complex, contextual reality... and force it through a meat grinder of transformations until it fits neatly into a predetermined schema for a predetermined use case. Then we pat ourselves on the back for having "clean" data.

Here's something fun to think about next time you're in a data meeting. (Yes, I just used "fun" and "data meeting" in the same sentence.)

What's clean for finance is dirty for marketing. What's clean for marketing is dirty for customer support. What's pristine for customer support is absolutely toxic for machine learning. AI? Who knows.

When you "standardize" customer names from "Bob" to "Robert"... you destroy information about how that customer wants to be addressed. When you convert all timestamps to UTC, you erase local context that might explain why sales spike at weird hours. When you normalize product categories, you eliminate the organic taxonomy your customers actually use.
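The timestamp case is easy to see with Python's standard `datetime` module. This is a minimal sketch around a hypothetical 11:30 pm sale in a UTC-5 store. Storing only the UTC value makes it look like a 4:30 am sale. Storing the original ISO string keeps the offset, so the local hour and the UTC hour are both still available.

```python
from datetime import datetime, timedelta, timezone

# A hypothetical sale recorded at 11:30 pm local time (UTC-5).
sale = datetime(2024, 3, 1, 23, 30, tzinfo=timezone(timedelta(hours=-5)))

# The usual "standardization": convert to UTC and store only that.
utc_only = sale.astimezone(timezone.utc)
print(utc_only.isoformat())  # 2024-03-02T04:30:00+00:00, a "4:30 am" sale

# Storing the original ISO string keeps the offset, so both views survive.
stored = sale.isoformat()
parsed = datetime.fromisoformat(stored)
print(stored)                                # 2024-03-01T23:30:00-05:00
print(parsed.hour)                           # 23: local hour recoverable
print(parsed.astimezone(timezone.utc).hour)  # 4: UTC is still one call away
```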

You're not cleaning. You're choosing winners and losers. You're saying finance's context matters more than marketing's. Or that today's use case matters more than tomorrow's.

In most companies, once you've "cleaned" it, you can't go back. That information is gone. Forever.

I was in a meeting once where an engineer said something that stuck with me. "The problem with our data is that it keeps changing shape."

No. No, no, no.

The data isn't changing shape. Reality is just... being reality. Things don't remain static. Your customers don't wake up thinking "I better make sure my behavior fits neatly into their PostgreSQL schema." Your business doesn't evolve according to your data model's foreign key constraints.

We built these rigid pipes forty years ago when storage was expensive and we had one database doing one thing. We had to make choices. We had to pick a schema and stick with it.

But it's not the 1980s anymore. We don't have those constraints. So why are we still acting like we do?

OK, so here's where this gets interesting. And maybe a little heretical.

What if we just... stopped?

What if we stopped trying to clean data at the point of collection? What if we stopped forcing it into predetermined shapes? What if we stopped making irreversible decisions about what information matters?

This is what we built Matterbeam to do. Instead of cleaning and transforming data as it comes in, we store everything in an immutable log. Every event. Every field. Every weird edge case. Every "anomaly." All of it.
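The general idea of an append-only, immutable log fits in a few lines of Python. This is a toy sketch of the pattern under our own assumptions: an in-memory list, with payloads wrapped read-only. It is not Matterbeam's implementation or API. Events can only be appended, never updated or deleted. Readers can replay from any offset, and odd extra fields (like the hypothetical `note` below) are kept rather than dropped.

```python
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Any, Iterator, List, Mapping


@dataclass(frozen=True)
class Event:
    """One recorded fact, kept exactly as it arrived."""
    offset: int
    payload: Mapping[str, Any]


@dataclass
class EventLog:
    """Toy append-only log: there is deliberately no update or delete."""
    _events: List[Event] = field(default_factory=list)

    def append(self, payload: dict) -> int:
        # Shallow-copy, then wrap read-only, so nobody "cleans" it after the fact.
        event = Event(len(self._events), MappingProxyType(dict(payload)))
        self._events.append(event)
        return event.offset

    def read(self, start: int = 0) -> Iterator[Event]:
        # Replay from any offset; stored history is never rewritten.
        yield from self._events[start:]


log = EventLog()
log.append({"customer_id": 42, "address_type": "billing", "address": "1 Main St"})
log.append({"customer_id": 42, "address_type": "shipping",
            "address": "9 Dock Rd", "note": "leave at side door"})

first = next(log.read())
try:
    first.payload["address"] = "somewhere tidier"
except TypeError:
    print("stored events are read-only")
print([e.offset for e in log.read()])  # [0, 1]
```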

Preserving every bit of context.

I know what you're thinking. "That sounds like chaos."

But here's the thing: if you make it easy to transform...

When marketing needs customer data, they can transform it for their needs. When finance needs the same data, they transform it for theirs. When that random executive asks for a weird analysis that no one anticipated... you can transform it for that too.

The source data? Still there. Untouched. Ready to be transformed a different way tomorrow when requirements change. Because they will change. You already know they do.
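Here's "transform per use case" as a minimal Python sketch, under simple assumptions. A hypothetical tuple of raw order events stands in for the log, and each team's view is just a function over it. The field names and both view functions are illustrative inventions. Neither view modifies `RAW_EVENTS`, so a new requirement tomorrow means writing another function, not re-collecting data.

```python
# Hypothetical raw order events, kept exactly as collected.
RAW_EVENTS = (
    {"order_id": 1, "customer_id": 42, "name": "Bob",
     "ship_to": "9 Dock Rd", "bill_to": "1 Main St", "amount": 30.0},
    {"order_id": 2, "customer_id": 42, "name": "Bob",
     "ship_to": "9 Dock Rd", "bill_to": "1 Main St", "amount": None},
    {"order_id": 3, "customer_id": 7, "name": "Ana",
     "ship_to": "5 Elm Ave", "bill_to": "5 Elm Ave", "amount": 12.5},
)


def marketing_view(events):
    """Marketing's 'clean': the name customers use and where to ship."""
    return {e["customer_id"]: {"name": e["name"], "ship_to": e["ship_to"]}
            for e in events}


def finance_view(events):
    """Finance's 'clean': billing address plus known totals; unknowns counted, not guessed."""
    view = {}
    for e in events:
        row = view.setdefault(e["customer_id"], {
            "bill_to": e["bill_to"], "known_total": 0.0, "unknown_orders": 0})
        if e["amount"] is None:
            row["unknown_orders"] += 1
        else:
            row["known_total"] += e["amount"]
    return view


print(marketing_view(RAW_EVENTS)[42])  # {'name': 'Bob', 'ship_to': '9 Dock Rd'}
print(finance_view(RAW_EVENTS)[42])    # {'bill_to': '1 Main St', 'known_total': 30.0, 'unknown_orders': 1}
```

The design choice is that "clean" lives with the reader, not the writer. Marketing keeps "Bob" and the shipping address. Finance keeps the billing address and counts the order with an unknown amount instead of averaging it away.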

One of our customers literally told us they changed their entire company strategy based on what became possible with this approach. Not because we gave them cleaner data. But because we stopped destroying information in the name of cleanliness.

They had a two-year migration planned. Instead, they started getting value within months. Why? Because they didn't have to figure out the perfect schema upfront to smash their old system into. They could collect now and figure out the shape later. They could use pieces of it today, evolving and migrating bits as each use case became clearer.

You want to know what really gets me?

It's the insights we're losing. The patterns we can't see. The questions we can't answer. All because somewhere along the way, someone decided that data had to be "clean."

Those outliers you're removing? They might be your most valuable customers. Those inconsistencies you're smoothing over? They might be telling you about a problem in your business process. Those "data quality issues" you're fixing? They might be the early signal of a market shift.

But we'll never know. Because we cleaned them away.

And then there's the real sign that we're doing something wrong: the "quick ask" for something just 10 degrees out of phase with the "clean" model... now takes six months to build.

I'm not saying data quality doesn't matter. It does. Deeply.

I'm saying our approach is backwards.

Instead of forcing data to be clean at the source, what if we preserved everything and let each use case decide what "clean" means for them? Instead of making irreversible transformations upfront, what if we could replay and reshape data as many times as we need?

This isn't some pipe dream. This is what modern storage economics and computing power make possible. This is what companies using Matterbeam are doing right now.

They're collecting data in its natural, domain-specific form. They're preserving all the context, all the weirdness, all the reality. And then they're transforming it... when they actually know what they need. Not when they're guessing what they might need someday.

Here's what I want you to think about.

What if that 80% of time you spend on data prep isn't necessary work? What if it's not even helpful work? What if it's actively harmful work that's destroying the very information you need?

What would you do with that time if you could get it back?

What questions would you finally ask if you didn't have to file a ticket and wait six months for a new pipeline?

What would you discover if you could see your data in a different shape tomorrow than you see it today?

Your data isn't dirty. It's trying to tell you something. Maybe it's time we stopped forcing it to shut up and started learning how to listen.

Want to see what your data looks like when you stop trying to clean it and start preserving it? Let's talk. We've helped companies cut "six-month" projects down to days. Not by cleaning data faster... but by stopping the whole cleaning charade altogether.