You know that feeling when you've been doing something the same way for so long that you can't imagine any other approach? That's where Josh Pendergrass was when his company first started using Matterbeam.

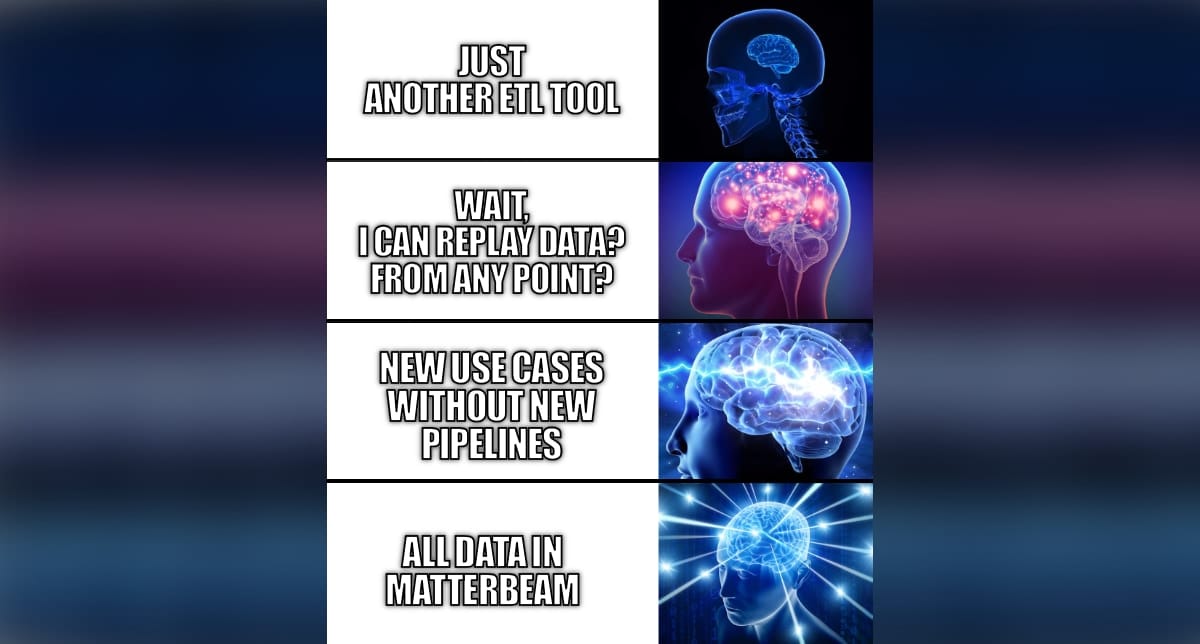

"At first I was like, that seems great. I don't know that I'll need that," Josh admitted during a recent conversation on DM Radio. He was talking about our immutable log... one of the things we get most excited about, but that initially seemed like just another backup feature to him.

Then something happened. Josh had a "light bulb moment".

Josh and his team at Broadlume (now part of Cyncly) had been collecting data with Matterbeam for a while. They were using it like any other data tool... moving stuff from one system to another, A to B, point-to-point. Nothing revolutionary (other than starting with a difficult source).

But then? They realized they could replay data from any point in time. Transform it differently. Send it to multiple destinations without rebuilding pipelines. Suddenly, the immutable log wasn't a feature. It was the feature.
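If you like to see ideas in code, here's a minimal Python sketch of what "replay from any point in time" means. To be clear, this is not Matterbeam's actual API. `ImmutableLog`, `Record`, `emit`, and the sample flooring data are all hypothetical names made up for illustration, and a plain in-memory list stands in for durable storage.

```python
from dataclasses import dataclass
from typing import Callable, Iterator


@dataclass(frozen=True)
class Record:
    offset: int      # position in the log, assigned on append
    timestamp: int   # event time, e.g. epoch seconds
    payload: dict


class ImmutableLog:
    """Hypothetical append-only log: records are added, never updated or deleted."""

    def __init__(self) -> None:
        self._records: list[Record] = []

    def append(self, timestamp: int, payload: dict) -> Record:
        record = Record(len(self._records), timestamp, dict(payload))
        self._records.append(record)
        return record

    def replay(self, since: int = 0) -> Iterator[Record]:
        """Yield every record with timestamp >= since, in log order."""
        return (r for r in self._records if r.timestamp >= since)


def emit(log: ImmutableLog, since: int, transform: Callable[[dict], object],
         destination: list) -> None:
    """Replay the log from `since`, reshape each payload, append to a destination."""
    for record in log.replay(since):
        destination.append(transform(record.payload))


log = ImmutableLog()
log.append(100, {"sku": "oak-plank", "qty": 4})
log.append(200, {"sku": "tile-grey", "qty": 10})
log.append(300, {"sku": "oak-plank", "qty": 2})

# Two destinations, two shapes, two starting points, one collected log.
warehouse_rows: list = []
dashboard_events: list = []
emit(log, 0, lambda p: (p["sku"], p["qty"]), warehouse_rows)
emit(log, 200, lambda p: f'{p["sku"]}: {p["qty"]}', dashboard_events)
```

The point of the sketch: adding a destination or changing a transform is a new call to `emit`, not a new collection job. The data was already captured, so the starting point is a parameter you pick later.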

"The light went off on like, oh my gosh, as long as we're collecting this stuff, I don't have to worry about setting a point in time where I want to connect this," Josh explained. "I can literally just make a change and it'll emit what I need and go exactly where it needs to go."

It gets even more interesting. Broadlume had been planning a massive system migration. They needed to replace a legacy ERP system (written in COBOL), but the traditional approach... syncing two systems, doing it all at once... was going to take two years.

So they made a tough call. They weren't even going to try. The timeline was just too painful.

Then Matterbeam.

"We realized after Matterbeam was already pulling this data that like, hey, we could use Matterbeam for this. We could literally cut two years off of the timeline," Josh told us.

They didn't just shave off some time. They compressed a two-year migration into months. And they did it screen by screen, function by function, keeping systems in sync while gradually moving over.

Josh dropped another truth bomb during our conversation: "I could tell you like 97% of the data in the data warehouse is not used for anything. We're just dumping it all there so that we have it in case in the future we want to run a report on it."

Sound familiar? It even has a name: Gartner calls it "dark data".

You're paying for storage. You're paying for compute. You're processing everything just in case someone might need it someday. It's like keeping every single receipt from the last decade in your desk drawer because maybe, possibly, you might need to reference that coffee you bought in 2019.

With Matterbeam, instead of dumping, hoarding and hoping, they only write to their warehouse what they actually need, when they need it. The data is all there in the immutable log if they need to go back. But they're not paying to store and process the same stuff seven different ways anymore.

Perhaps the best validation came from an unexpected place. Josh mentioned that now, whenever there's a data project, the product managers come to him with the same question: "Can we use Matterbeam to make it faster?"

It's not the engineering team pushing a tool. It's the business side pulling for it because they've seen what becomes possible. They've started thinking about data as a resource instead of a constraint.

One product manager needed data that would have taken six months to access through traditional pipelines. With Matterbeam, six months became two weeks, and with the latest version of Matterbeam it would have been possible in two days.

That frustration... that disconnect between having data and being able to use it... that's what we built Matterbeam to solve.

During the show, I tried to explain what makes this different. Think about your database internals for a second (stay with me here). Most databases have a write-ahead log that captures changes before they're organized into tables and indexes. It's how databases stay durable and consistent.

We took that concept and blew it up to organizational scale. Instead of being hidden inside one database, we made it the foundation of your entire data infrastructure. Your Snowflake becomes just one use, one materialization. Your graph database is another. Your real-time dashboard is another. All from the same immutable source.
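To make "one log, many materializations" concrete, here's a rough Python sketch. It's an illustration under assumptions, not how Matterbeam is implemented: the event shape (`op`, `customer`, `store`, `total`) and the three `materialize_*` functions are hypothetical, and each function stands in for a real destination like a warehouse table, a graph database, or a dashboard.

```python
from collections import defaultdict

# Hypothetical change events, in the order they were appended to the log.
LOG = [
    {"op": "upsert", "customer": "c1", "store": "s1", "total": 120},
    {"op": "upsert", "customer": "c2", "store": "s1", "total": 80},
    {"op": "upsert", "customer": "c1", "store": "s2", "total": 45},
    {"op": "delete", "customer": "c2", "store": "s1"},
]


def materialize_table(log):
    """Warehouse-style view: latest total per (customer, store) key."""
    table = {}
    for event in log:
        key = (event["customer"], event["store"])
        if event["op"] == "delete":
            table.pop(key, None)
        else:
            table[key] = event["total"]
    return table


def materialize_graph(log):
    """Graph-style view: which stores each customer is currently linked to."""
    edges = defaultdict(set)
    for event in log:
        if event["op"] == "delete":
            edges[event["customer"]].discard(event["store"])
        else:
            edges[event["customer"]].add(event["store"])
    # Drop customers whose last link was deleted.
    return {customer: stores for customer, stores in edges.items() if stores}


def materialize_dashboard(log):
    """Dashboard-style view: count of events by operation type."""
    counts = defaultdict(int)
    for event in log:
        counts[event["op"]] += 1
    return dict(counts)


table = materialize_table(LOG)
graph = materialize_graph(LOG)
dashboard = materialize_dashboard(LOG)
```

Each view is just a different fold over the same events, which is the same trick a database uses to rebuild its tables from a write-ahead log. None of the functions modifies `LOG`, so a fourth view can be added later without touching the first three.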

Mark Madsen, who's been in the data space longer than most, put it perfectly: "You change the tools and the tool capabilities, you change what you can do with them."

Josh's parting words? "First I didn't really understand the immutable log and now I say you can pry it from my cold dead hand."

That transformation... from skepticism to can't-live-without-it... happens because once you experience data without the constraints of traditional pipelines, you can't go back. It's like trying to go back to dial-up after you've had broadband. Technically possible, but why would you?

The most gratifying part for me? Hearing from a data scientist who basically tackled me in the hallway saying, "Did you build this thing? This got me data in 30 minutes I've been struggling to get for three months."

That's not just a faster pipeline. That's a fundamentally different relationship with your data. That's a new kind of data freedom.

DMRadio - September 11, 2025

Want to see what this kind of data freedom could mean for your organization? We're not asking you to take our word for it. Start with one use case, like Josh did. You might just find yourself changing your entire company strategy too.